LLM configuration

LLM (Large Language Model) configuration is the central hub for managing all LLM accounts and models used across the Yellow.ai platform. Instead of setting up models separately in each feature, you can configure them once and reuse them across modules such as AI Agents, Knowledge Base, Dynamic Chat nodes, and Super Agent.

By default, every workspace includes a Yellow-provided account (GPT-4.1) so you can start using LLM-powered features immediately. If you prefer, you can connect your own provider accounts (OpenAI, Azure OpenAI, AWS Bedrock, Anthropic) and assign them to specific modules or environments.

This centralized setup helps you:

- Apply one configuration to all modules where LLMs are used.

- Assign different accounts or models to Sandbox, Staging, or Production.

- Avoid repetitive setup across features.

- Manage usage limits, roles, and audit logs in one place.

This document explains how to configure and manage LLM accounts on the Yellow.ai platform. It covers:

- Yellow-provided default accounts

- Configure your preferred LLM account

- Environment-specific configuration

- Usage limits

- Access control and audit logs

Default Yellow Account

Every AI agent on the Yellow.ai platform comes with a default LLM account provided by Yellow.ai. This account is pre-configured with the platform's preferred model (GPT-4.1).

With the default account, no manual configuration is required: you can start using LLM-powered features like AI Agents, prompts, workflows, and Knowledge Base Agents right away.

Configure Your Preferred LLM Account

Yellow.ai allows you to connect and use your own LLM provider accounts instead of the default Yellow-provided one.

Supported providers include:

- OpenAI

- Azure OpenAI

- AWS Bedrock

- Anthropic

To use a customer-owned account, add the provider credentials via the LLM Configuration section in platform settings.

When using your own LLM provider account, you are responsible for maintaining sufficient quota and availability to meet your traffic requirements.

Environment-Specific Configuration

The LLM Configuration page allows you to assign different LLM accounts and models for each environment—Sandbox, Staging, and Production.

This setup ensures:

- Safe testing – Experiment with new models or accounts in Sandbox or Staging before moving them to Production.

- Customization – Each environment can have its own account or model settings based on traffic, cost, or performance requirements.
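As a mental model, the per-environment assignment behaves like a lookup that falls back to the Yellow default account when an environment has no override. The Python sketch below only illustrates that idea and is not a Yellow.ai API: `LLMSetting`, `overrides`, `resolve`, and the account name `My OpenAI account` are hypothetical, and on the platform the assignment is made on the LLM Configuration page.

```python
from dataclasses import dataclass

ENVIRONMENTS = ("Sandbox", "Staging", "Production")


@dataclass(frozen=True)
class LLMSetting:
    """Hypothetical record: which account and model an environment uses."""
    account: str
    model: str


# The Yellow-provided default applies wherever nothing else is assigned.
DEFAULT = LLMSetting(account="Yellow default", model="GPT-4.1")

# Illustrative override: try a customer-owned account in Sandbox only.
overrides = {
    "Sandbox": LLMSetting(account="My OpenAI account", model="GPT-4"),
}


def resolve(environment: str) -> LLMSetting:
    """Return the setting for an environment, falling back to the default."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"Unknown environment: {environment}")
    return overrides.get(environment, DEFAULT)
```

In this sketch, Sandbox uses the override while Staging and Production keep the default until they are assigned their own account or model.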

Usage Limits

When using Yellow-provided LLM accounts, default rate limits are applied to ensure platform stability and fair usage across all AI agents.

The limits include:

- Per-request token limit: Maximum tokens allowed in a single request.

- Per-user, per-minute limit: Maximum number of LLM calls a single user can make per minute.

- Per-agent, per-minute limit: Maximum number of LLM calls that one AI agent can make per minute, in total.

If you expect higher traffic or plan to go live with Yellow-provided credentials, request a production environment limit increase from the Yellow.ai support team in advance.
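To make the three limits concrete, here is a minimal Python sketch of how such checks could be modeled. It is an assumption for illustration, not the platform's implementation: it uses a simple fixed one-minute window, the class and function names are hypothetical, and the number `4000` is a placeholder rather than an actual Yellow.ai limit.

```python
import time


class PerMinuteLimiter:
    """Fixed-window counter: at most max_calls per key in each 60-second window."""

    def __init__(self, max_calls, clock=time.monotonic):
        self.max_calls = max_calls
        self.clock = clock
        self._windows = {}  # key -> (window_start, calls_in_window)

    def allow(self, key):
        now = self.clock()
        start, count = self._windows.get(key, (now, 0))
        if now - start >= 60:
            # The previous window has ended, so start a fresh one.
            start, count = now, 0
        if count >= self.max_calls:
            self._windows[key] = (start, count)
            return False
        self._windows[key] = (start, count + 1)
        return True


MAX_TOKENS_PER_REQUEST = 4000  # placeholder value, not a real platform limit


def first_limit_hit(tokens, user_id, agent_id, user_limiter, agent_limiter):
    """Return the name of the first limit a request exceeds, or None if allowed."""
    if tokens > MAX_TOKENS_PER_REQUEST:
        return "per-request token limit"
    if not user_limiter.allow(user_id):
        return "per-user, per-minute limit"
    if not agent_limiter.allow(agent_id):
        return "per-agent, per-minute limit"
    return None
```

The checks run in order, so a request is reported against the first limit it fails. Passing a `clock` function makes the window behavior easy to test without waiting a real minute.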

Access Control and Audit

Role-based access

- Only users with the Admin role can add, update, or delete LLM accounts.

- Super Admin privileges are required to make changes to LLM configuration in the Production environment.

Audit logging

- All configuration changes—such as account additions, deletions, or updates—are automatically logged.

- These logs are available under Audit Logs in Settings.

Access LLM Configuration

You can access LLM configuration in two ways:

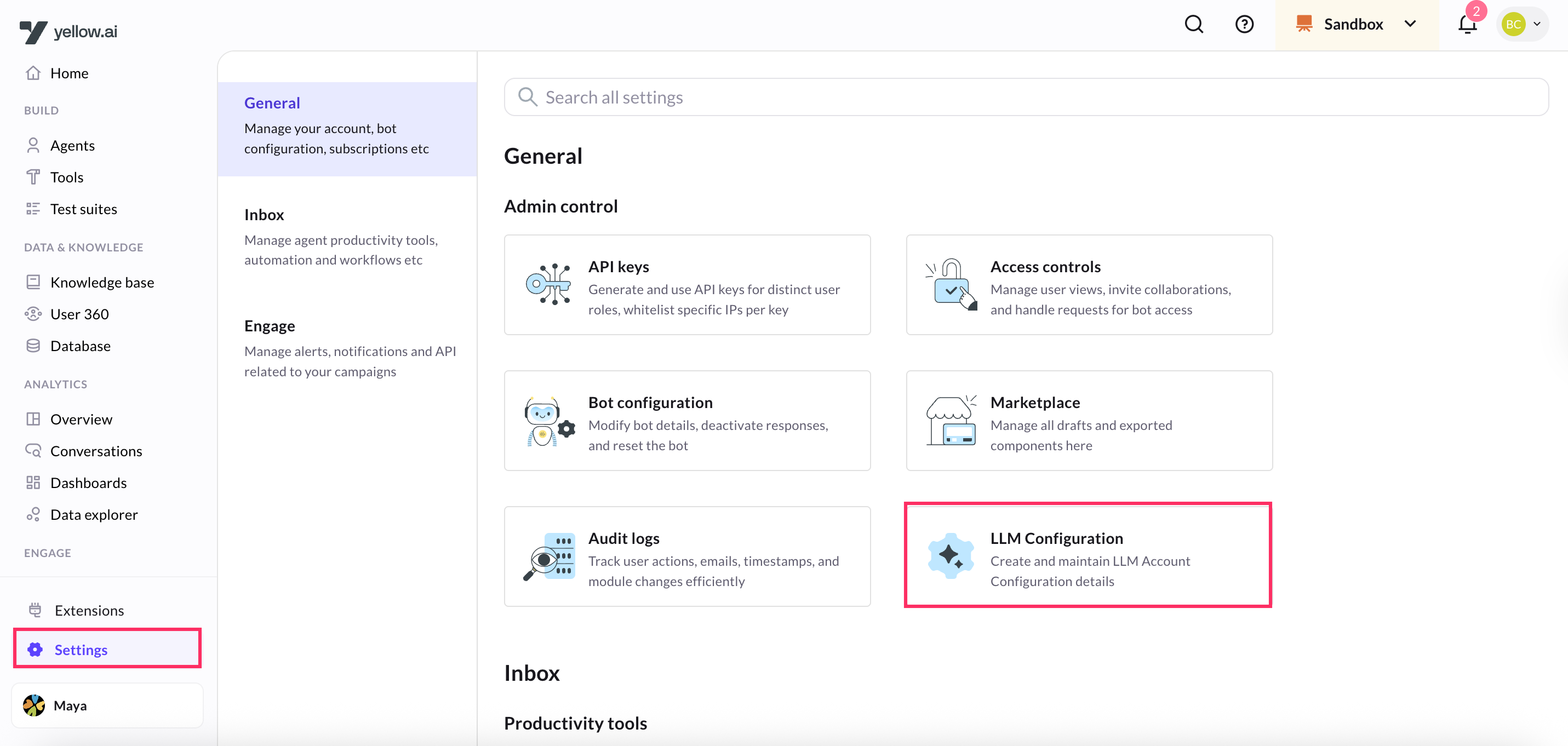

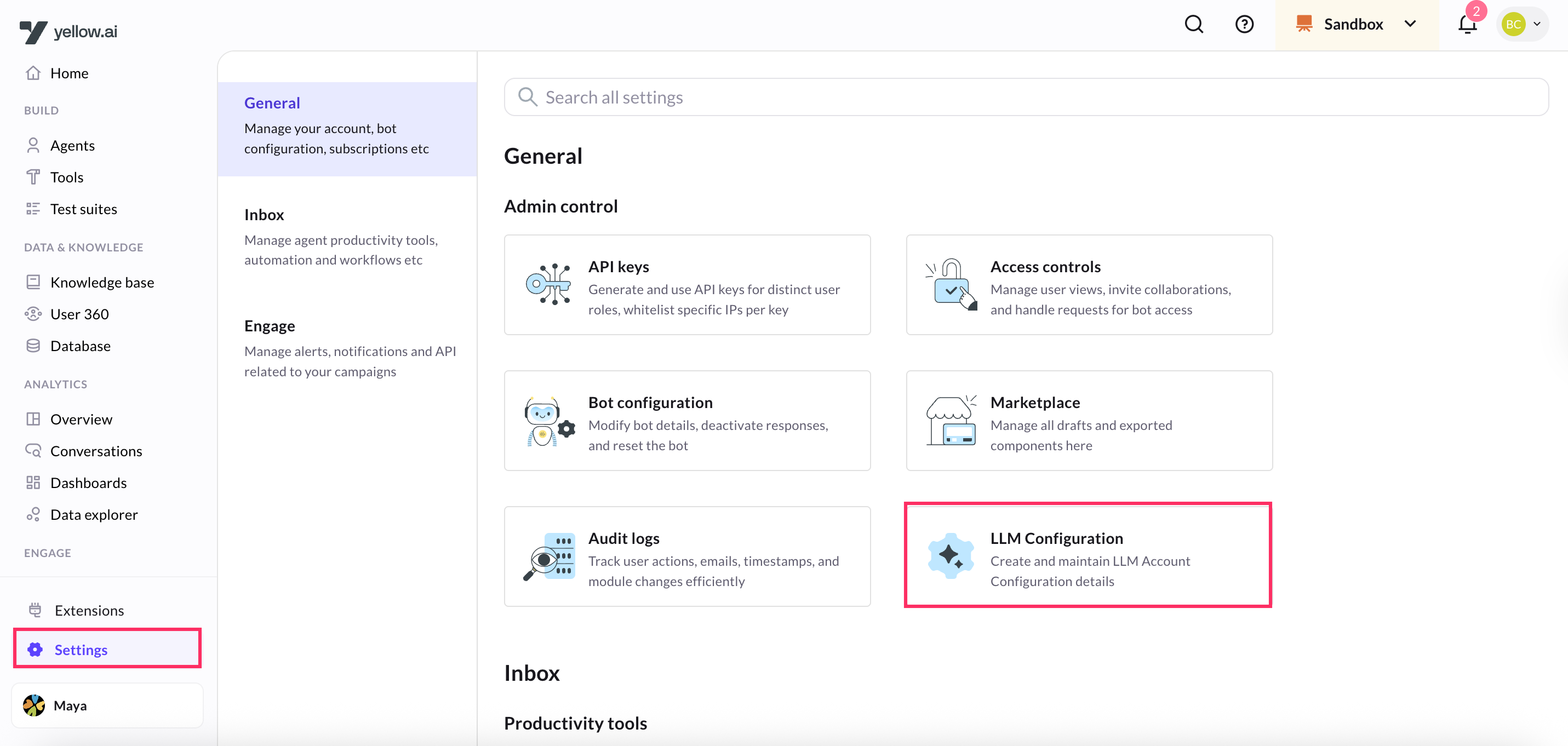

From the Settings Section

- Go to Settings > LLM Configuration.

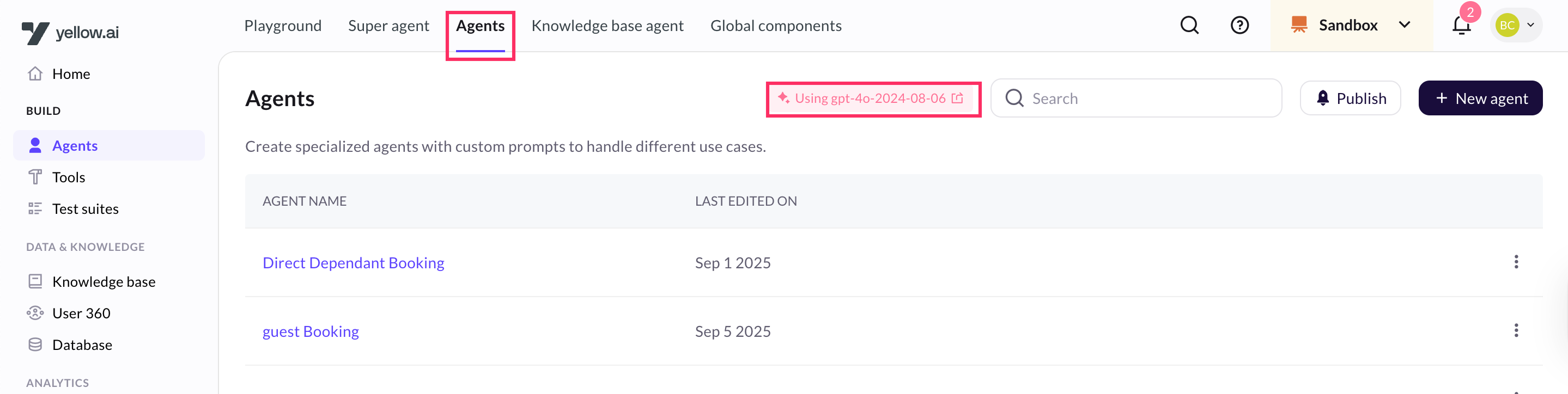

Directly from an LLM-Powered Module

- Navigate to a specific LLM-powered module (Dynamic Chat Node, Conversations, Agent AI, or Knowledge Base).

- Click the highlighted icon on the page. This redirects you to the LLM Configuration page.

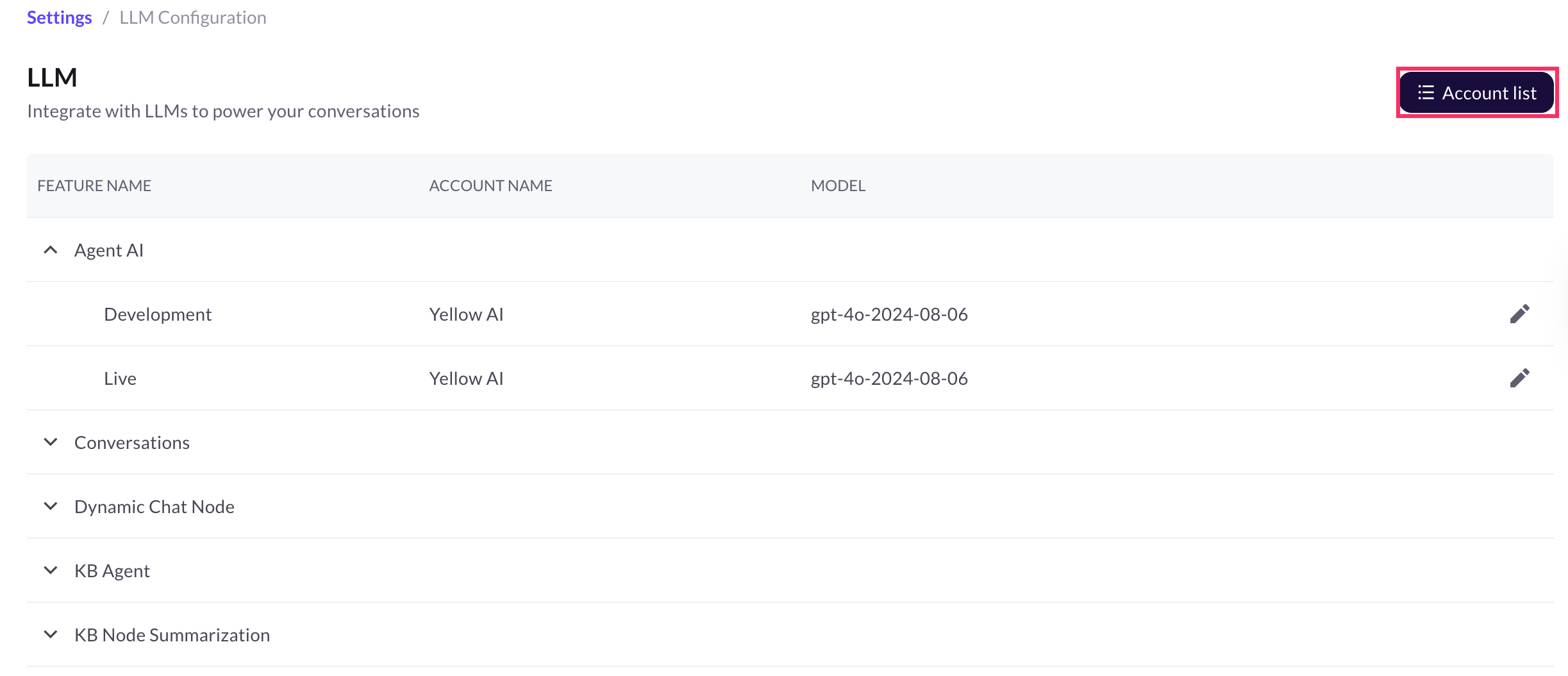

Manage LLM Accounts

In the LLM Configuration section, a Super Admin can create, edit, and disconnect LLM accounts, and switch models across environments.

Add LLM Account

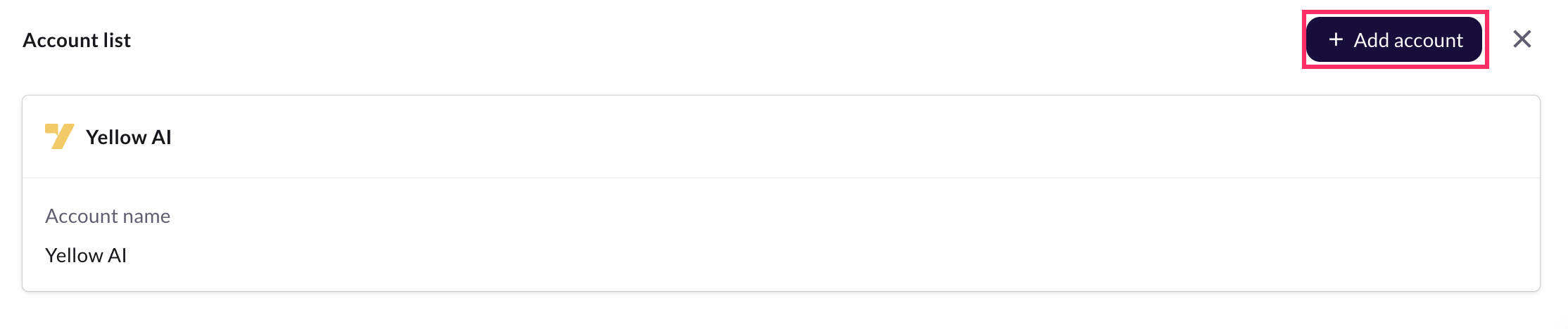

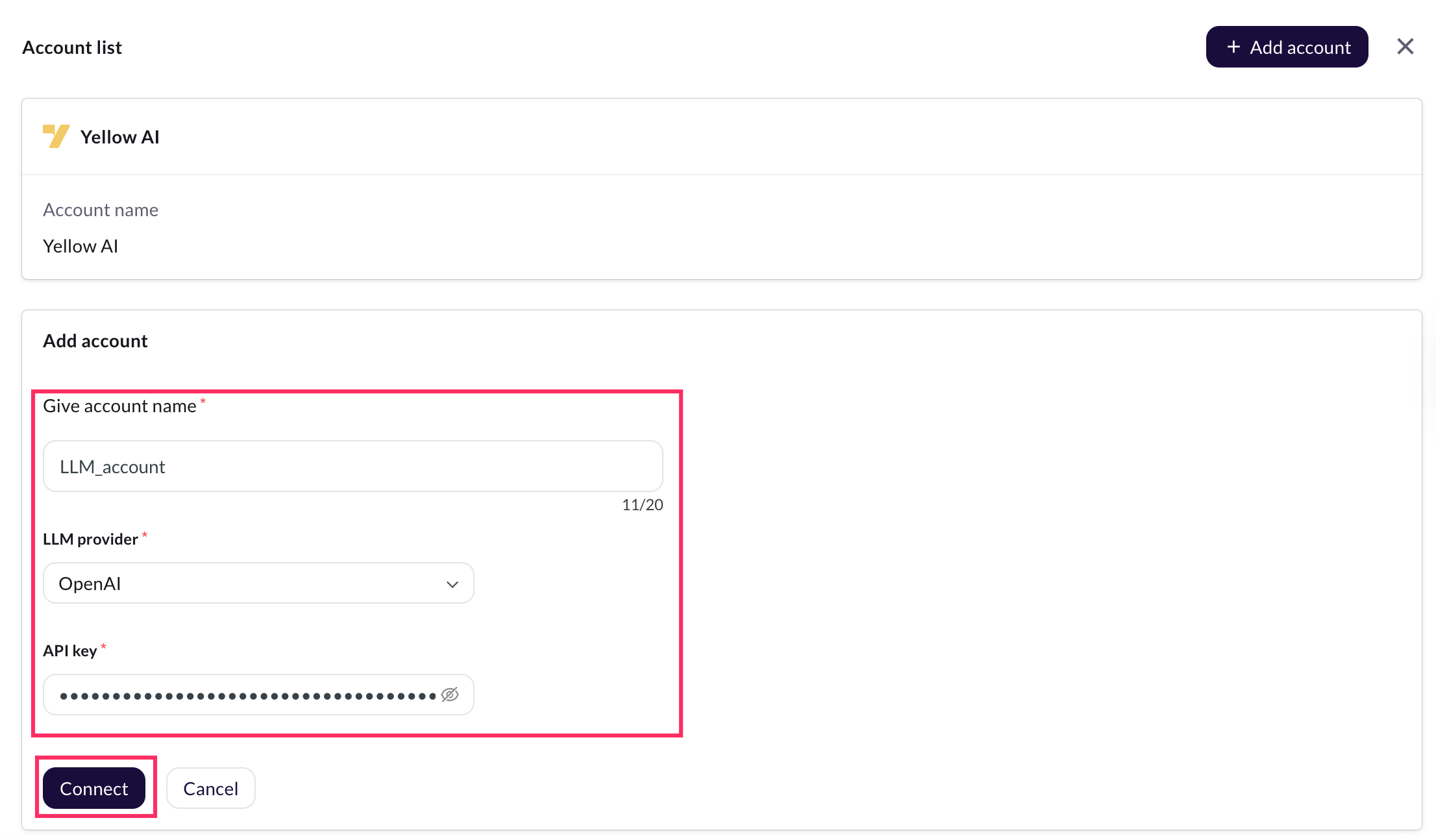

You can view the default Yellow account before adding a new one. If needed, you can create your own account to manage LLM-powered features.

- Go to Settings > LLM Configuration.

- Click Account List.

- Click + Add Account.

- Enter an Account name.

- In LLM provider, select your preferred provider.

- Enter the API key and resource (for example, from GPT-3.5 or GPT-4).

- Click Connect.

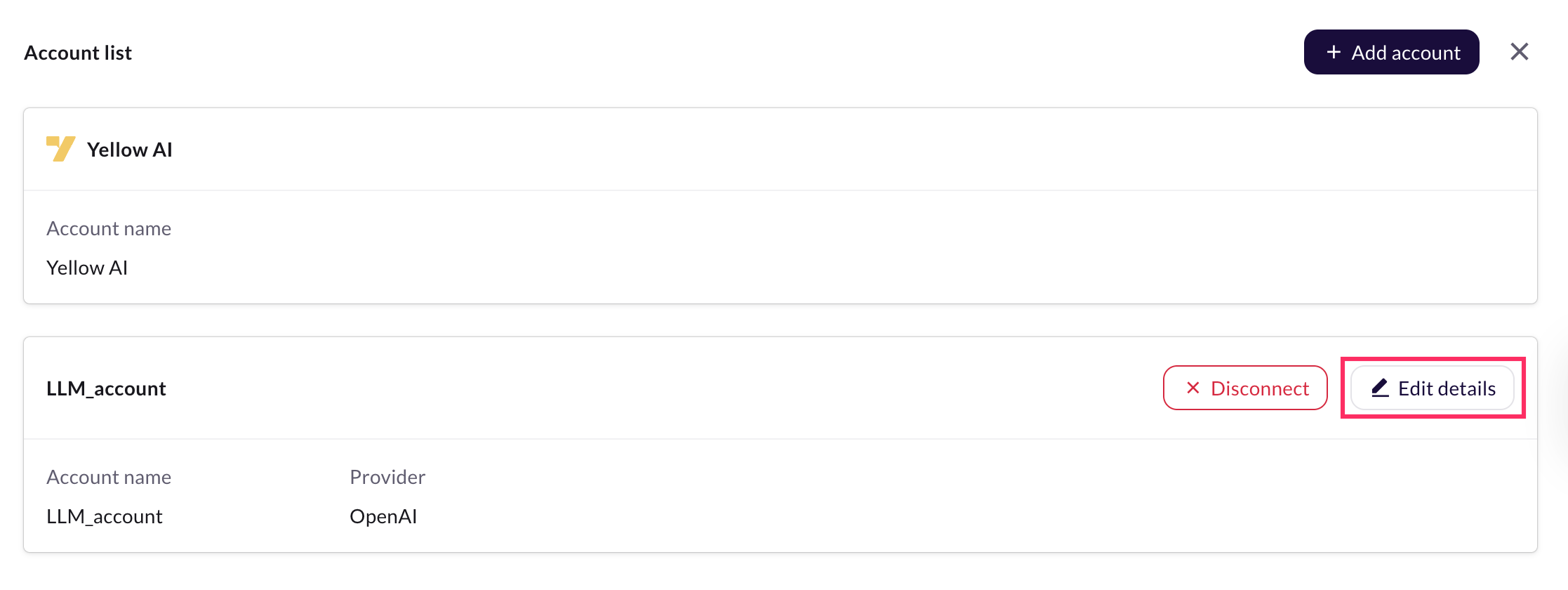

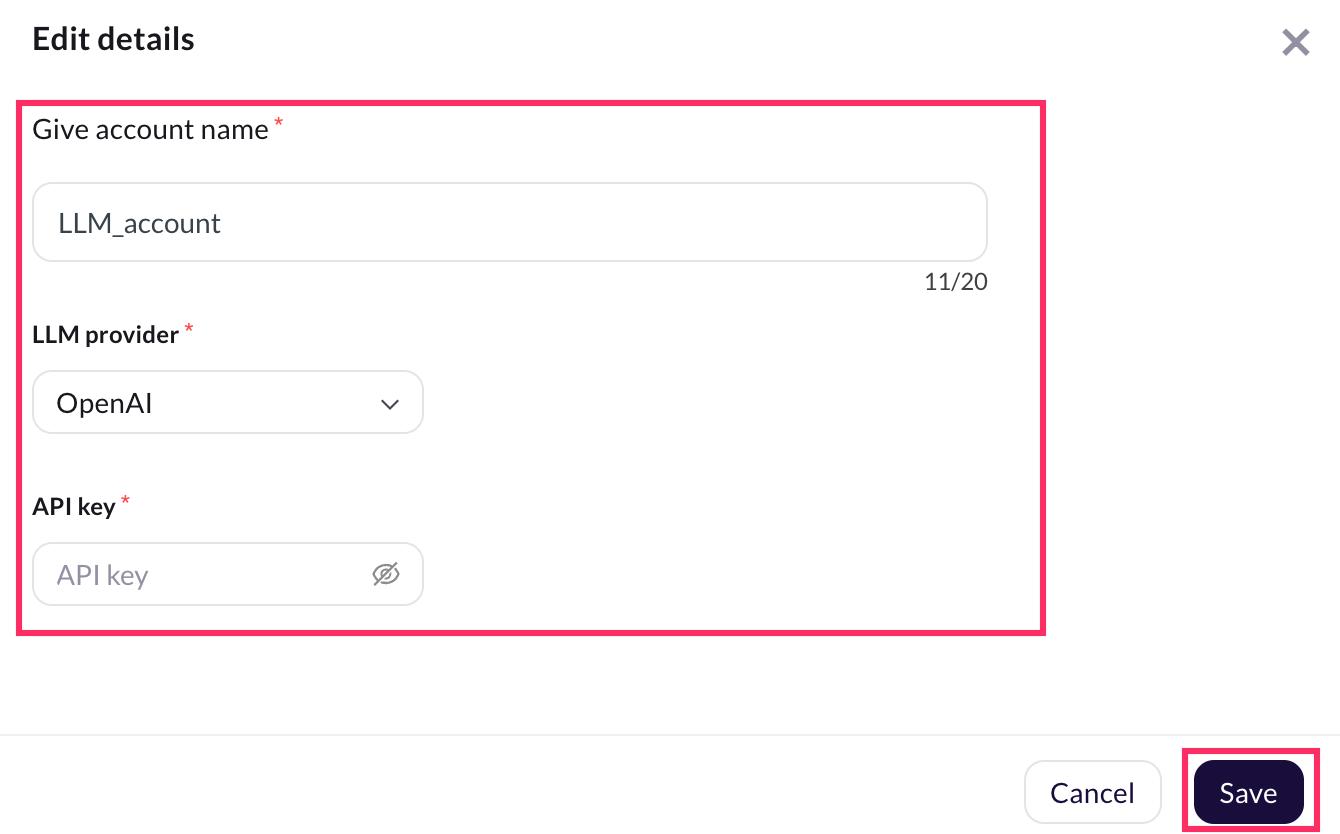

Edit LLM Account

You can update the details of an existing LLM account or provider if needed. You cannot edit or delete the default Yellow account.

- Go to Account List > Add Account.

- Navigate to the account you want to edit and click Edit details.

- Update the required account details and click Save.

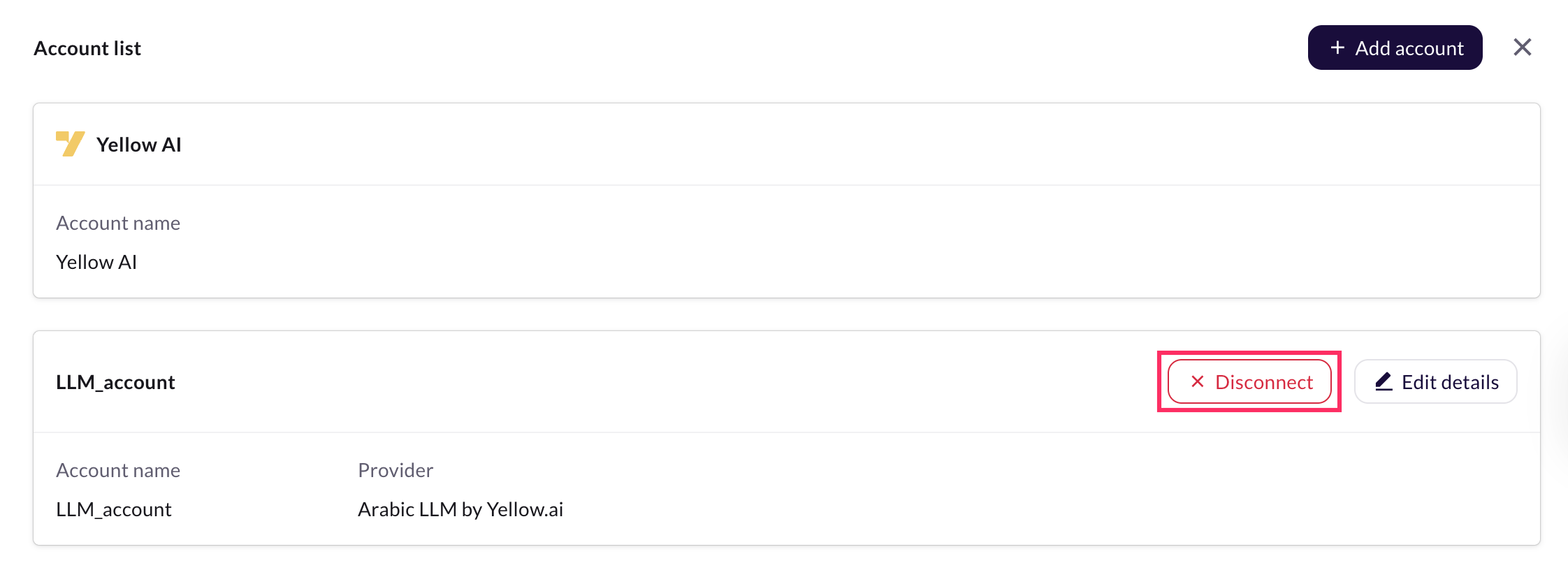

Disconnect LLM Account

You cannot disconnect the default Yellow account. However, you can disconnect any other account you have created.

Before disconnecting, ensure another account is available to handle all LLM features.

- Select an alternative account that you want the module to use.

- Go to Account List > + Add Account.

- Navigate to the account you want to disconnect, then click Disconnect.

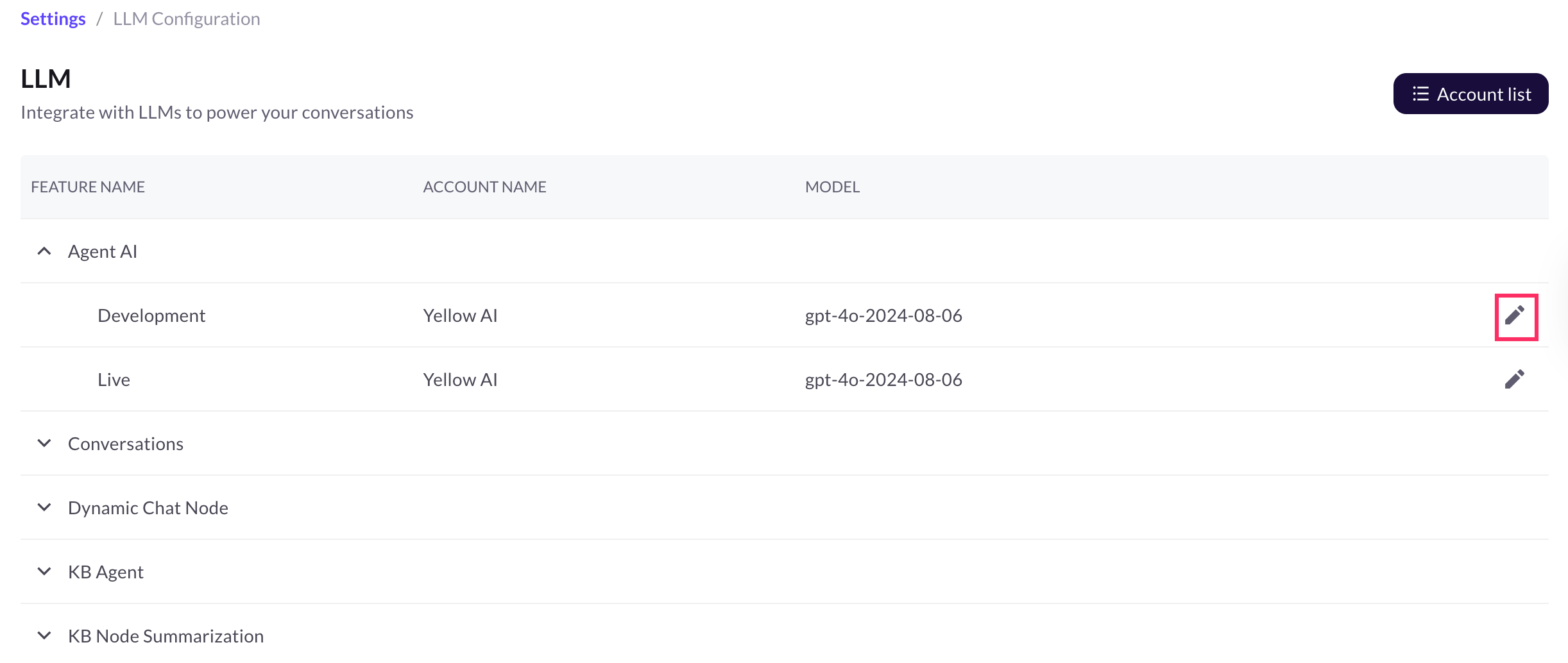

Edit LLM Configuration

By default, the Yellow account details are displayed for each environment. You can update the existing LLM model or account as needed.

For example, you can set the GPT-4.0 model in Staging and GPT-3.5 in Production.

-

Navigate to the specific feature where you want to edit the configuration.

-

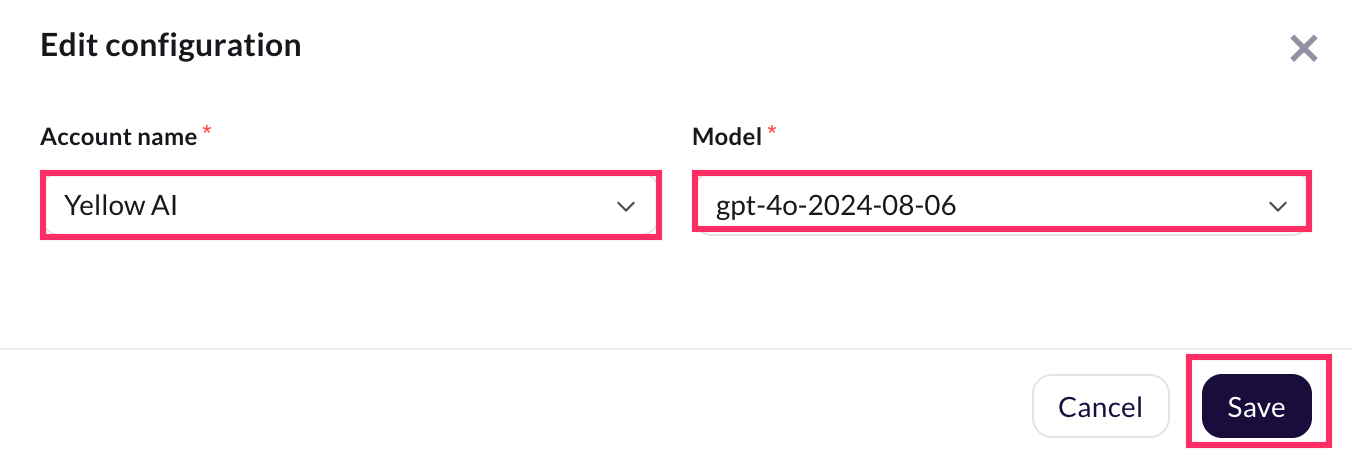

Expand the drop-down menu and click the Edit icon corresponding to the environment.

-

Update the Account name and Model as needed.

-

Click Save.

FAQs

Is an LLM required to use Yellow.ai?

No. The platform offers a wide range of features that work independently of LLM integration.

However, integrating an LLM enhances capabilities such as handling complex queries, generating dynamic responses, and improving FAQ interactions.

What happens if usage exceeds the platform rate limits?

Requests that exceed the threshold will be rejected. For per-minute limits, the quota resets automatically after one minute.
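If you call LLM-powered features from your own code, one way to cope with a rejected request is to wait for the next one-minute window and try again. The Python sketch below is an illustration under stated assumptions, not documented platform behavior: the `RateLimited` exception and the `send` callable are hypothetical stand-ins for however a rejection is surfaced to your integration.

```python
import time


class RateLimited(Exception):
    """Hypothetical signal that a call was rejected for exceeding a rate limit."""


def call_with_retry(send, max_attempts=3, wait_seconds=60.0, sleep=time.sleep):
    """Call send(); on RateLimited, wait for the quota to reset and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send()
        except RateLimited:
            if attempt == max_attempts:
                # Out of attempts: let the caller handle the rejection.
                raise
            # Per-minute quotas reset after one minute, so wait that long.
            sleep(wait_seconds)
```

Retrying only helps with the per-minute limits. A request that exceeds the per-request token limit would be rejected again unless it is shortened.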

How can I check which rate limit is being hit?

You can verify this in the conversation logs, which indicate the type of rate limit that was exceeded.

If I publish an AI agent, will the LLM configuration from a lower environment move to the upper environment?

No. LLM configuration is not tied to the publish process. You must manually configure the settings in the Production environment.